If you've recently updated to Joomla 3.4 you might have noticed this message:

A change to the default robots.txt files was made in Joomla! 3.3 to allow Google to access templates and media files by default to improve SEO. This change is not applied automatically on upgrades and users are recommended to review the changes in the robots.txt.dist file and implement these change in their own robots.txt file.

So why was there a change? But first:

Why is there a robots.txt file with every Joomla distribution?

The robots.txt file indicates which parts of your site you don't want search robots (also known as crawlers) to access. The file is part of the Robots Exclusion Standard, and most search engines follow it. For example, the file lets a crawler know that it may visit folder xyz but may not view the resources in folder abc. The protocol is purely advisory: it won't stop bots that don't adhere to the standard from indexing those resources.

Let's have a look at the robots.txt included with Joomla 3.4:

# If the Joomla site is installed within a folder such as at

# e.g. www.example.com/joomla/ the robots.txt file MUST be

# moved to the site root at e.g. www.example.com/robots.txt

# AND the joomla folder name MUST be prefixed to the disallowed

# path, e.g. the Disallow rule for the /administrator/ folder

# MUST be changed to read Disallow: /joomla/administrator/

#

# For more information about the robots.txt standard, see:

# http://www.robotstxt.org/orig.html

#

# For syntax checking, see:

# http://tool.motoricerca.info/robots-checker.phtml

User-agent: *

Disallow: /administrator/

Disallow: /bin/

Disallow: /cache/

Disallow: /cli/

Disallow: /components/

Disallow: /includes/

Disallow: /installation/

Disallow: /language/

Disallow: /layouts/

Disallow: /libraries/

Disallow: /logs/

Disallow: /modules/

Disallow: /plugins/

Disallow: /tmp/

The lines that have the hash sign # are comments meant for humans. They are ignored by the bots that read the file.

The "User-agent: *" line means that the section that follows applies to all robots. The Disallow lines tell the robot which directories it shouldn't visit.
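To check how a standards-following crawler reads these rules, you can feed them to Python's standard `urllib.robotparser` module. This is only a minimal sketch: it uses a shortened copy of the rules above, and the example paths are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Shortened copy of the default Joomla 3.4 rules shown above.
RULES = """\
User-agent: *
Disallow: /administrator/
Disallow: /components/
Disallow: /plugins/
Disallow: /tmp/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())


def is_allowed(path, user_agent="*"):
    """Return True if the given crawler may fetch the path under RULES."""
    return parser.can_fetch(user_agent, path)


# Hypothetical paths, for illustration only.
for path in (
    "/administrator/index.php",
    "/components/com_something/",
    "/index.php",
    "/media/system/js/core.js",
):
    print(path, "->", "allowed" if is_allowed(path) else "blocked")
```

Anything that does not start with one of the disallowed folders is reported as allowed, which is why the media path in the last line passes.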

Keep in mind that search engines are greedy. They want to index everything they find on your site. If those lines weren't in robots.txt, search engines would start indexing resources everywhere, which is not what you want on a Joomla website. (In the end, you don't want links to /components/com_something.)

Why has the robots.txt file been updated?

Last year Google announced that it is finally able to execute JavaScript on your pages. This means it has started to render your pages much like the user's browser does and to index the rendered result. (In the past, only the content that was present on the page when it was loaded was indexed.)

Recently Google also announced that it is going to favor mobile-friendly pages in the search results. So if you are searching for something on your phone and two pages can give you an answer, Google will give a few extra points to the page that is optimised for mobile devices and show that one first.

So what do these announcements have to do with robots.txt? Well, Google can't execute your JavaScript or read your CSS files if they sit in a folder that is disallowed in the robots.txt file. That was the case with the media and templates folders. The media folder is used by extension developers to store their JS and CSS resources, and the templates folder is obviously used by your template.

Why isn't the robots.txt file updated automatically with a Joomla update?

Well, that would be really tricky! Imagine that you've made some changes to the file: do you want to see your changes overwritten by a Joomla update? I bet not! That is why, instead of overwriting your file, Joomla is distributed with a robots.txt.dist file that shows what the new file looks like. You can then compare your robots.txt with the robots.txt.dist file and make the modifications yourself.
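If you'd rather not compare the two files by eye, a few lines of Python can print the differences. This is a rough sketch, not part of Joomla: the `robots_diff` helper is my own name, and the script assumes it is run from the Joomla root folder where both files live.

```python
import difflib
from pathlib import Path


def robots_diff(current_text, dist_text):
    """Return a unified diff from the current robots.txt to the .dist version."""
    return list(difflib.unified_diff(
        current_text.splitlines(),
        dist_text.splitlines(),
        fromfile="robots.txt",
        tofile="robots.txt.dist",
        lineterm="",
    ))


if __name__ == "__main__":
    # Assumption: both files sit in the current working directory.
    current = Path("robots.txt")
    dist = Path("robots.txt.dist")
    if current.exists() and dist.exists():
        for line in robots_diff(current.read_text(), dist.read_text()):
            print(line)
    else:
        print("robots.txt or robots.txt.dist not found in the current folder")
```

Lines starting with `-` exist only in your robots.txt and lines starting with `+` exist only in robots.txt.dist, so you can decide for each one whether to adopt the change.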

Do I need to make any other modifications to the robots.txt in order for search engines to properly index my Joomla site?

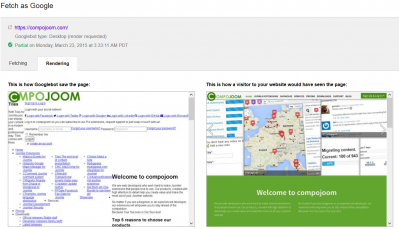

Most probably yes! What you should definetely do is - navigate to the Google webmaster tool (I hope you already use it, if not it's time to start). Select the site you want to inspect and then go to "Crawl" -> "Fetch as Google" option. Enter the URL for inspection and select the "Fetch & render" option. Once Google has fetched your site you'll be able to see how it also see it. Here is an example how compojoom looked like at first:

Below the rendering result you also get a list of reasons why Google isn't able to render the page exactly as the user's browser does.

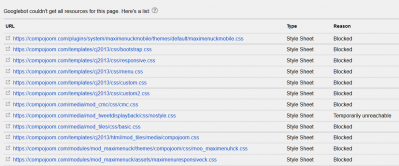

As you can see from the screenshot, Google wasn't able to access resources in the plugins, media and templates folders. With the latest robots.txt, media and templates are no longer disallowed, so what is left to fix is the plugins folder. The plugins folder is still disallowed, and you should leave it that way. What you can do instead is add Allow lines that point directly to the resources you want Google to be able to index:

Disallow: /plugins/

Allow: /plugins/content/sigplus/css

Allow: /plugins/content/sigplus/engines

Allow: /plugins/system/maximenuckmobile/themes

Allow: /plugins/system/maximenuckmobile/assets

With those four lines I've specified exactly which folders may be indexed. Here is the content of our robots.txt, just for reference:

User-agent: *

Disallow: /administrator/

Disallow: /bin/

Disallow: /cache/

Allow: /cache/com_comment/cache

Allow: /cache/thumbs

Allow: /cache/preview

Disallow: /cli/

Disallow: /components/

Allow: /components/com_socialconnect/js

Allow: /components/com_easyblog/assets

Allow: /components/com_easyblog/themes

Allow: /components/com_kunena/template

Disallow: /includes/

Disallow: /installation/

Disallow: /language/

Disallow: /layouts/

Disallow: /libraries/

Disallow: /logs/

Disallow: /modules/

Allow: /modules/mod_maximenuck/themes

Allow: /modules/mod_maximenuck/assets

Disallow: /plugins/

Allow: /plugins/content/sigplus/css

Allow: /plugins/content/sigplus/engines

Allow: /plugins/system/maximenuckmobile/themes

Allow: /plugins/system/maximenuckmobile/assets

Disallow: /tmp/

If you've looked carefully, you will have noticed that we've allowed a few more resources as well, for example /cache/com_comment/cache. This folder belongs to CComment. CComment minifies all the JS files that it uses and stores the minified versions in the cache folder. Since Joomla has a general rule that disallows everything in the cache folder, we had to help a little and explicitly allow this resource.
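Why does an Allow line win over the broader Disallow line for the same folder? Google documents that the most specific (longest) matching rule applies, regardless of the order of the lines, and that Allow wins a tie. The sketch below illustrates that precedence under a few assumptions: it handles a single "User-agent: *" group only, ignores wildcards, and the function names and file names are mine. I wrote the matching by hand because, as far as I can tell, Python's built-in `urllib.robotparser` takes the first matching line in file order instead.

```python
def parse_rules(robots_txt):
    """Collect (is_allow, path) pairs, assuming a single 'User-agent: *' group."""
    rules = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field in ("allow", "disallow") and value:
            rules.append((field == "allow", value))
    return rules


def google_allows(rules, path):
    """Longest matching rule wins; on a tie, Allow wins. No wildcard support."""
    best_len, allowed = -1, True  # no matching rule means the path is allowed
    for is_allow, prefix in rules:
        if path.startswith(prefix):
            if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
                best_len, allowed = len(prefix), is_allow
    return allowed


RULES = parse_rules("""\
User-agent: *
Disallow: /cache/
Allow: /cache/com_comment/cache
Disallow: /plugins/
Allow: /plugins/content/sigplus/css
""")

# Hypothetical file names, for illustration only.
print(google_allows(RULES, "/cache/com_comment/cache/script.min.js"))  # True
print(google_allows(RULES, "/cache/page/abc.html"))                    # False
print(google_allows(RULES, "/plugins/content/sigplus/sigplus.php"))    # False
```

The minified CComment file is allowed because "/cache/com_comment/cache" is a longer match than "/cache/", while everything else in the cache and plugins folders stays blocked.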

There is no universal robots.txt file that works on all Joomla sites, because every site has different extensions installed. So my advice is to render your site with Google Webmaster Tools and check the result. Then allow the necessary resources until the page renders the way you expect. This takes some time, but in the end you'll have a site that is better indexed by Google.
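If the list of blocked resources is long, a small script can turn it into candidate Allow lines. This is only a sketch of one possible approach: `suggest_allow_lines` is a hypothetical helper of mine, the URLs are invented, and it simply proposes the folder that contains each blocked file. Review every suggestion before adding it to your robots.txt.

```python
from posixpath import dirname
from urllib.parse import urlparse


def suggest_allow_lines(blocked_urls):
    """Turn blocked resource URLs into 'Allow:' lines for their parent folders."""
    folders = {dirname(urlparse(url).path) for url in blocked_urls}
    folders = {f for f in folders if f not in ("", "/")}  # never allow the site root
    # Skip folders that are already covered by a shorter suggested folder.
    kept = [
        f for f in sorted(folders)
        if not any(f != other and f.startswith(other + "/") for other in folders)
    ]
    return ["Allow: " + folder for folder in kept]


# Invented URLs standing in for the blocked resources the tool reports.
blocked = [
    "http://www.example.com/plugins/content/sigplus/css/sigplus.css",
    "http://www.example.com/plugins/content/sigplus/css/extra/more.css",
    "http://www.example.com/modules/mod_maximenuck/themes/default/style.css",
]

for line in suggest_allow_lines(blocked):
    print(line)
```

After adding the lines, fetch and render the page again to confirm that the blocked list has shrunk.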

Anything else I need to know?

I advise you to make the modifications. The sooner you do, the sooner Google and other search engines will find the data that is hidden behind AJAX calls. A good example is Hotspots. When we render the map, the information about the current results is loaded with an AJAX call. In the past Google wasn't able to find the Hotspots that users were seeing on the site. But since the update, Google renders the page like the user's browser does and is now able to index all Hotspots.